BBC Research & Development are researching the use of artificial intelligence (AI) techniques to create Intelligent Video Production Tools. In collaboration with the BBC Natural History Unit, we have been working closely with the Watches team (who produce the Springwatch, Autumnwatch and Winterwatch programmes) to test the tools we have developed. Over the last three series, our system monitored the Watches’ remote cameras for activity, logging events and making recordings of moments of potential interest.

Automated camera monitoring is particularly useful for natural history programming because the animals appear on their own terms and the best moments are often unexpected. Having an automated eye watching over the live footage helps to ensure those moments are captured.

Our previous blog post on this subject covered the development of our tools for Springwatch and the shift to a cloud-based remote workflow. Since then, we’ve continued to develop and polish the tools for use in Autumnwatch 2020 and Winterwatch 2021.

Algorithmic Improvements

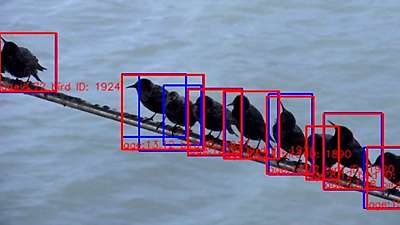

We've spent time researching several areas of potential improvement, one of which is object tracking. Our machine learning models could already detect multiple objects of interest (in this case, animals) in each frame, but it is very helpful to be able to track those objects from frame to frame. Tracking lets the generated metadata describe what is going on in a scene more clearly, for example by following an individual animal through a sequence rather than reporting a series of unconnected per-frame detections. Duncan Walker researched and implemented a selection of object tracking algorithms during a team placement on our graduate scheme. The tracking proved effective, and it was certainly tested to the limit by the starlings filmed during Winterwatch (as seen below)!

Starlings at Aberystwyth pier are detected and individually tracked by our analysis system.
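Linking per-frame detections into tracks can be done in many ways. As a minimal sketch of one simple technique, greedy matching on intersection-over-union (IoU), the Python below assigns each new detection to the best-overlapping track from the previous frame. It is an illustration under assumptions, not necessarily one of the algorithms Duncan implemented: the `(x1, y1, x2, y2)` box format, the `Tracker` class and the `iou_threshold` value of 0.3 are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical box format: (x1, y1, x2, y2) in pixel coordinates.
Box = tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes (0.0 to 1.0)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Tracker:
    """Greedy IoU tracker: each detection joins the best-overlapping track
    from the previous frame, or starts a new track if none overlaps enough."""
    iou_threshold: float = 0.3
    next_id: int = 0
    tracks: dict[int, Box] = field(default_factory=dict)  # track id -> last box

    def update(self, detections: list[Box]) -> list[int]:
        """Return one track id per detection, in the same order."""
        # Every (overlap, track, detection) triple, highest overlap first.
        pairs = sorted(
            ((iou(box, det), tid, d)
             for tid, box in self.tracks.items()
             for d, det in enumerate(detections)),
            reverse=True,
        )
        matched: dict[int, int] = {}  # detection index -> track id
        for overlap, tid, d in pairs:
            if overlap < self.iou_threshold:
                break  # all remaining pairs overlap even less
            if tid in matched.values() or d in matched:
                continue  # track or detection already taken
            matched[d] = tid

        ids: list[int] = []
        for d in range(len(detections)):
            if d not in matched:  # unmatched detection starts a new track
                matched[d] = self.next_id
                self.next_id += 1
            ids.append(matched[d])
        # Tracks with no detection in this frame are dropped immediately.
        self.tracks = {tid: detections[d] for d, tid in matched.items()}
        return ids


if __name__ == "__main__":
    tracker = Tracker()
    print(tracker.update([(0, 0, 10, 10), (50, 50, 60, 60)]))  # [0, 1]
    print(tracker.update([(52, 51, 62, 61), (1, 0, 11, 10)]))  # [1, 0]
```

A greedy matcher like this is cheap but fragile when animals overlap or move quickly, as a flock of starlings does; more robust trackers typically add motion prediction (for example a Kalman filter) and optimal assignment between tracks and detections.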

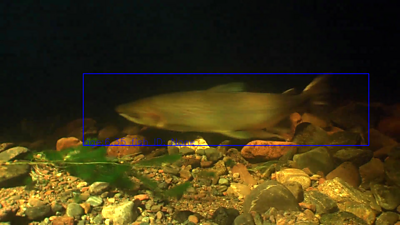

The most recent Winterwatch featured a wide variety of camera locations, including the pier in Aberystwyth, the River Ness and the New Forest. Cameras placed underwater in the River Ness gave us the chance to try our system out on fish. We trained a new machine learning detection model for the underwater scene, which successfully detected salmon as they swam upstream.

A salmon is detected in footage from underwater cameras in the River Ness.

Recording so many moments of wildlife activity presents a new challenge: the most interesting clips, the ones of value to production teams, need to be surfaced so that producers aren't buried under many less interesting ones. We've been looking at ways to address this, ranging from statistical approaches based on the metadata we generate to more abstract machine learning methods.
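As an illustration of the statistical end of that range, the sketch below ranks clips by how unusual their detected labels are for a given camera, weighting each label in the style of inverse document frequency. The scoring rule and the `rank_clips` function are hypothetical; the post does not say which statistics were actually used.

```python
from collections import Counter
from math import log


def rank_clips(clips: dict[str, list[str]]) -> list[tuple[str, float]]:
    """Rank clips from one camera by how unusual their detected labels are.

    `clips` maps a (hypothetical) clip id to the animal labels detected in it.
    Each distinct label in a clip adds log(N / n_label), where N is the number
    of clips and n_label the number of clips containing that label, so labels
    seen in every clip add nothing and rare visitors add the most.
    """
    n = len(clips)
    # Count how many clips each label appears in (once per clip).
    doc_freq = Counter(label for labels in clips.values() for label in set(labels))
    scores = {
        clip_id: sum(log(n / doc_freq[label]) for label in set(labels))
        for clip_id, labels in clips.items()
    }
    # Highest score first; ties keep their original order.
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
```

On a camera where nearly every clip shows the same visitors, a single clip containing a rarer animal rises to the top, while clips showing only the usual visitors score zero.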

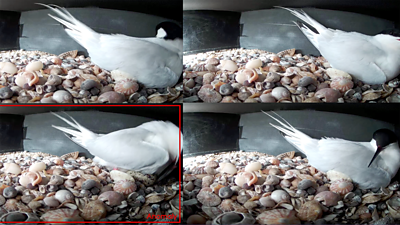

One effective approach, tested on Springwatch footage, was an 'Anomaly Detector'. A feature vector is generated for each recording and evaluated to determine whether the recording fits the general trend of recordings previously seen from the same camera, or whether it is an 'anomaly' and therefore more likely to be of interest. This lets the system select clips based loosely on the animals' actions and movement, not merely on their presence. One success story came from running it on footage of a pair of terns: the anomaly detector picked out clips of parental behaviour, such as an egg being turned over or the brooding and non-brooding partners swapping places, both much more interesting than many of the other clips recorded in the tern box.

A clip featuring egg-rolling is picked out of several others by our anomaly detection system as being of potential interest.
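The post does not specify the features or the scoring method behind the anomaly detector. As a minimal sketch under those unknowns, the detector below keeps a per-camera running mean and variance of each feature (Welford's algorithm) and flags a recording whose root-mean-square z-score exceeds a threshold. The class name and the `threshold` and `min_history` values are assumptions, and a simple per-dimension Gaussian stands in for whatever model was actually used.

```python
import math
from dataclasses import dataclass, field


@dataclass
class CameraAnomalyDetector:
    """Scores each recording's feature vector against the recordings seen so
    far from one camera, using per-feature running mean and variance."""
    threshold: float = 3.0   # hypothetical cut-off on the RMS z-score
    min_history: int = 5     # recordings needed before flagging anything
    count: int = 0
    mean: list[float] = field(default_factory=list)
    m2: list[float] = field(default_factory=list)  # sums of squared deviations

    def score(self, features: list[float]) -> float:
        """Root-mean-square z-score of `features` against the history."""
        if self.count < 2:
            return 0.0
        total = 0.0
        for x, mu, m2 in zip(features, self.mean, self.m2):
            # Sample standard deviation; tiny fallback if a feature never varied.
            std = math.sqrt(m2 / (self.count - 1)) or 1e-9
            total += ((x - mu) / std) ** 2
        return math.sqrt(total / len(features))

    def update(self, features: list[float]) -> None:
        """Add a recording to this camera's history (Welford's algorithm)."""
        if not self.mean:
            self.mean = [0.0] * len(features)
            self.m2 = [0.0] * len(features)
        self.count += 1
        for i, x in enumerate(features):
            delta = x - self.mean[i]
            self.mean[i] += delta / self.count
            self.m2[i] += delta * (x - self.mean[i])

    def observe(self, features: list[float]) -> bool:
        """Score a new recording, then learn from it; True means 'anomaly'."""
        is_anomaly = (self.count >= self.min_history
                      and self.score(features) > self.threshold)
        self.update(features)
        return is_anomaly
```

One detector instance would be kept per camera, so that "normal" is always defined relative to that camera's own history rather than mixing, say, a tern box with a riverbank camera.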

- BBC R&D - Using AI to Monitor Wildlife Cameras at Springwatch

- BBC Winterwatch - Where birdwatching and artificial intelligence collide

- BBC R&D - Cloud watching: moving Springwatch remote wildlife cameras into the cloud

Infrastructure Improvements

As mentioned previously, in the run-up to Springwatch 2020 we had to move our toolkit to a remote, cloud-based workflow rather than using hardware on-site. During Winterwatch 2021, we moved some elements of our external cloud infrastructure onto resources provided by the Computing and Networks at Scale (CANS) team. This reduced our running costs, and we will be looking at ways to make greater use of the CANS team's systems in future.

We also made some major changes to our core analysis framework after Springwatch, refactoring and streamlining the core code to improve performance, reliability and flexibility. We also integrated with the Cloud-fit Production team's media store services. This meant we could offload video ingest and recording to Cloud-fit's tools, instead of our analysis code ingesting all the footage and being responsible for recording moments of interest. The benefit of this approach was that the Cloud-fit system continuously recorded all footage from the feeds we were analysing, so our analysis tools could refer back to footage ingested over any time range. This allowed us to provide some custom functionality for the digital team, such as recording a beautiful time-lapse or creating clips of custom length. The quality of the video and audio recordings also improved significantly as a result.
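Because the whole feed is recorded continuously, a time-lapse becomes a matter of choosing which stored frames to fetch. The Cloud-fit media store's API isn't described here, so the sketch below assumes only that a frame can be retrieved for any timestamp, and shows how evenly spaced sample times for a time-lapse over an arbitrary range might be planned. The `timelapse_timestamps` helper and its 25 fps default are hypothetical.

```python
from datetime import datetime, timedelta


def timelapse_timestamps(start: datetime, end: datetime,
                         output_seconds: float,
                         output_fps: int = 25) -> list[datetime]:
    """Evenly spaced source timestamps for a time-lapse of the range [start, end).

    Hypothetical helper: it assumes the media store has recorded the feed
    continuously, so a frame can be fetched for any timestamp in the range.
    """
    if end <= start:
        raise ValueError("end must be after start")
    n_frames = max(1, round(output_seconds * output_fps))
    step: timedelta = (end - start) / n_frames  # source time per output frame
    return [start + i * step for i in range(n_frames)]


if __name__ == "__main__":
    dawn = datetime(2021, 1, 20, 6, 0)
    frames = timelapse_timestamps(dawn, dawn + timedelta(hours=2), output_seconds=10)
    print(len(frames), frames[1] - frames[0])  # 250 0:00:28.800000
```

For example, squeezing two hours of footage into ten seconds at 25 fps means sampling one frame every 28.8 seconds, a 720× speed-up. A custom-length clip is the simpler case: fetch every stored frame between the chosen start and end times.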

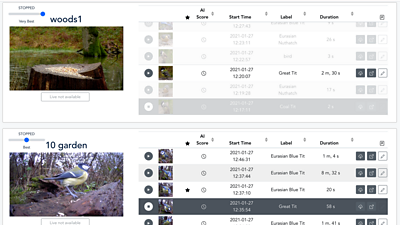

Our user interface for the production team, with new features including highlights, comments, and better time selection.

We made several improvements to the production teams' user interface, including the ability to highlight and comment on clips and a more streamlined way of selecting a date and time range of interest. We also created a new management user interface to start, stop and modify the analysis running on different cameras, and to control our custom machine learning services. The long-term aim is for this to become a self-service tool for productions, reducing the technical knowledge required to run and maintain it.

What Next?

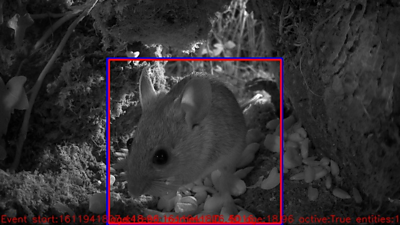

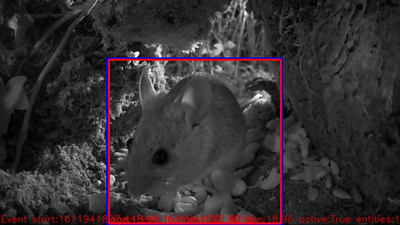

A mouse makes an appearance in a hedgerow during the night on one of Winterwatch’s cameras.

We want to make it as easy as possible for the production teams to get their hands on the clips recorded and selected by this tool. We've run some successful experiments transferring the clips generated by our analysis tools to the production system's media servers, and we'll continue to develop integrations with their systems.

Anomaly detection showed significant potential for selecting clips of interest, and further research and development in this area should improve the effectiveness of our tools. We will also continue to improve the accuracy of the analysis, especially animal classification, and to iterate on the user experience and design of our tools.

We're excited to explore the potential user-facing experiences that the data generated by our tools may enable us to create. This rich data could offer new insights into the natural world and allow us to share them with our audiences in new and powerful ways.


- BBC R&D - Intelligent Video Production Tools

- BBC Winterwatch - Where birdwatching and artificial intelligence collide

- BBC World Service - Digital Planet: Springwatch machine learning systems

- BBC R&D - The Autumnwatch TV Companion experiment

- BBC R&D - Designing for second screens: The Autumnwatch Companion

- BBC Springwatch | Autumnwatch | Winterwatch

- BBC R&D - Cloud-Fit Production Update: Ingesting Video 'as a Service'

- BBC R&D - Tooling Up: How to Build a Software-Defined Production Centre

- BBC R&D - Beyond Streams and Files - Storing Frames in the Cloud

- BBC R&D - IP Studio

- BBC R&D - IP Studio Update: Partners and Video Production in the Cloud

- BBC R&D - Running an IP Studio

- BBC R&D - Interpreting Convolutional Neural Networks for Video Coding

- BBC R&D - Video Compression Using Neural Networks: Improving Intra-Prediction

- BBC R&D - Faster Video Compression Using Machine Learning

- BBC R&D - AI & Auto Colourisation - Black & White to Colour with Machine Learning


Immersive and Interactive Content section

The IIC section is a group of around 25 researchers investigating ways of capturing and creating new kinds of audio-visual content, with a particular focus on immersion and interactivity.