For the last few years, the Cloud-fit Production Capabilities team I am part of has been building software for working with live and stored media in the cloud. Our software recently became mature enough that we would like others to start using it. The restrictions introduced to control the coronavirus pandemic meant many productions switched from working on-site with traditional broadcasting tools to remote working and cloud-based tools. As one such programme went through these changes, we found a suitable opportunity with another BBC Research & Development team who work closely with it.

The Springwatch, Autumnwatch, and Winterwatch programmes (collectively known as the 'Watches') are usually run from a small paddock of outside-broadcast trucks at some remote location in the British Isles. Starting with Springwatch 2020, the team temporarily moved to work from home using cloud-based production. A minimal crew remained to operate the cameras safely.

The Watches feature rarely seen views of animals from around the British Isles in their natural habitats. To achieve this, wildlife cameras are installed in carefully chosen locations and are monitored for animal activity. BBC R&D's Intelligent Production Tools team (the analysis team) has been providing the Watches with tools to assist in finding interesting footage amongst the many hours of recordings. Previously, the analysis team would work alongside the rest of the team in the field, running their analysis on powerful computers. When the production moved to the cloud, so too did the analysis work.

Moving both production and analysis work to the cloud for Springwatch was done at relatively short notice. After Springwatch had concluded, the analysis team wanted to spend less time managing their cloud platform and more time working towards their research goals. Migrating their analysis software onto our software platform was a sensible next step. Our software has been carefully designed with cloud computing characteristics in mind, which has led to several innovative steps. We have created a media store, and file-based input and output.

This post delves into more detail about this collaboration between two R&D teams. I'll discuss how the cloud-based production system changed and its benefits for both the analysis team and the content of Autumnwatch 2020 and Winterwatch 2021. I'll touch briefly on some of the problems we encountered and discuss our plans for the future.

The existing design

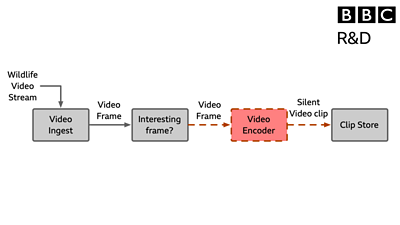

The cloud-based system built by the analysis team worked like this:

Each incoming video stream is analysed, frame-by-frame, and if it contains some activity the algorithm decides is interesting, then a recording starts. When the activity stops, the recording stops and the clip is exported. The video clip is made available to the production team, along with some useful data generated by the analysis. A simplified outline of this system is shown in the diagram above.
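As a rough illustration of the behaviour just described, the sketch below starts a recording when activity begins and stops it when activity ends. It is not the analysis team's code: `record_clips`, the `(timestamp, frame)` input shape, and the `is_interesting` callback are all hypothetical names chosen for this example.

```python
from typing import Callable, Iterable, List, Optional, Tuple


def record_clips(
    frames: Iterable[Tuple[float, object]],
    is_interesting: Callable[[object], bool],
) -> List[Tuple[float, float]]:
    """Return (start, end) timestamps of clips recorded while activity lasts.

    `frames` yields (timestamp, frame) pairs in time order. `is_interesting`
    stands in for the real analysis algorithm (an assumption for this sketch).
    """
    clips: List[Tuple[float, float]] = []
    start: Optional[float] = None  # timestamp at which the current recording began
    last: Optional[float] = None   # most recent interesting timestamp

    for timestamp, frame in frames:
        if is_interesting(frame):
            if start is None:
                start = timestamp  # activity begins: start recording
            last = timestamp
        elif start is not None:
            clips.append((start, last))  # activity stopped: export the clip
            start = None

    if start is not None:
        clips.append((start, last))  # stream ended while still recording
    return clips
```

Note that in this arrangement only the frames inside each recording survive, which is why a clip could not later be made longer.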

This system was successful, and the clips it produced were used during Springwatch 2020. However, there were some shortcomings. The clips contained no sound, so a member of the production team had to manually add some audio or a note indicating that the clip had no sound. This extra work meant these clips were less likely to be used.

It was also not possible to make the clips longer. While this might not seem like a significant problem, editors would sometimes ask for clips with an extra few seconds of inactivity at the beginning or the end, for example, to set the context for an animal coming into the frame.

Migrating Autumnwatch onto Cloud-Fit

To make the analysis work on Cloud-fit's platform, both teams needed to make changes. The most significant new feature we added was a script to ingest the wildlife camera streams into our media store. This script was named 'Juvenile Salmon' to keep with Cloud-fit's animal-themed naming convention and to reflect its relative immaturity.

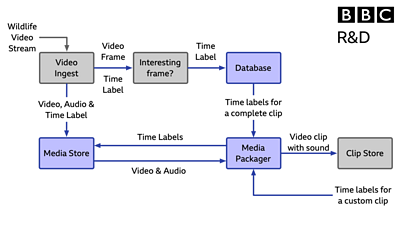

Creating a script rather than a full-blown service was necessary, given the limited amount of time available. The analysis team modified a version of this script so that video frames could be sent for analysis. Our media packaging service (named 'Bobcat') makes clips from the media in the store.

The modified system is shown in the diagram above. Although this system looks more complicated, it is significantly more useful. Now, instead of a fixed set of silent video clips, the production staff (and anyone else who has access to the system - more on that in a moment!) have access to clips and also data describing how this clip was made.

Now producers can create a longer clip to show the scene before an animal emerges, or join together two animal clips showing one animal leaving a scene and another arriving, and include the inactivity in between. Or they can request any other section of recorded video, like this sunset, made for Winterwatch in January 2021.

Winter skies are just beautiful! ❄️ Check out this beautiful sunset time-lapse from the New Forest! #Winterwatch ❄️

BBC Springwatch (@BBCSpringwatch), February 5, 2021

How does this work? When the analysis decides a video frame contains something interesting, only the time label for that frame is recorded, not the video frame itself. The media store records all the video and audio and can provide audio and video on request for an exact time range. A video clip is created automatically if most of the video frames are labelled as interesting in a particular time range. As before, this clip is made available to the production team along with some data derived during the analysis process.
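One way to picture the labelling step is the sketch below, which works only on time labels and never touches the video itself. The fixed-size windows, the `threshold` parameter, and the name `find_clip_ranges` are assumptions made for illustration; the post does not say how "most of the video frames" is actually evaluated.

```python
from typing import List, Tuple


def find_clip_ranges(
    labels: List[Tuple[float, bool]],
    window: int,
    threshold: float = 0.5,
) -> List[Tuple[float, float]]:
    """Turn per-frame time labels into time ranges worth clipping.

    `labels` is a time-ordered list of (timestamp, interesting) pairs.
    Frames are grouped into consecutive windows of `window` frames; a window
    qualifies when more than `threshold` of its frames are interesting.
    Adjacent qualifying windows are merged into one range.
    """
    ranges: List[List[float]] = []
    previous_qualified = False

    for i in range(0, len(labels), window):
        chunk = labels[i:i + window]
        interesting = sum(1 for _, flag in chunk if flag)
        qualifies = interesting / len(chunk) > threshold
        if qualifies:
            if previous_qualified:
                ranges[-1][1] = chunk[-1][0]  # extend the current range
            else:
                ranges.append([chunk[0][0], chunk[-1][0]])  # open a new range
        previous_qualified = qualifies

    return [(start, end) for start, end in ranges]
```

Each returned range could then be passed to whatever service cuts the clip from the stored media (Bobcat, in the system described here), since the store holds all the audio and video for that period.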

Trial by wildlife

The modified system was trialled during Autumnwatch 2020 and Winterwatch 2021, and it has been a great success. While our ambitions were modest (don't lose any data, keep the services running), the Watches producers found the services reliable, and the new features were well received and well used. Producing clips with sound meant that the extra steps described earlier can be avoided, and the broadcast and digital editors can use our automatically generated clips as if they had come from a production team.

We're delighted that things worked reliably, though we did need to make a few changes. These all stemmed from problems related to inconsistencies in the timing or metadata in the incoming wildlife camera streams. Our services had been designed with studio working as the primary use-case and did not account for the kinds of common problems when working with streams coming over the internet from remote locations. It was a testament to our robust, modular design that the changes needed to deal with these problems could be added straightforwardly. The Juvenile Salmon ingest script also worked well, although it will need further work to turn it into a fully-fledged resilient service.

Some words from Matthew Judge of the analysis team, who works directly with the production team:

- "Having the ability to dynamically transcode clips of any length allowed us to create media that suited the digital production team's specific needs, including pre- and post-roll. This was also used for bespoke requests, such as generating time-lapses.

- Being able to generate longer clips meant we could experiment by creating 'highlight reels', and generate miscellaneous clips on request. The inclusion of audio meant that clips could be used in the same way as other media recorded by the production team.

- The use of the Cloud-fit infrastructure and services meant that we could concentrate on the performance and effectiveness of our artificial intelligence system without having to worry about the overhead of recording media."
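Because clips are defined by time ranges rather than by fixed recordings, pre-roll, post-roll, and joined clips reduce to simple arithmetic on start and end times. The helpers below are a minimal sketch under that assumption; `add_roll` and `join_clips` are hypothetical names and do not correspond to any Cloud-fit API.

```python
from typing import Tuple

TimeRange = Tuple[float, float]  # (start, end) in seconds


def add_roll(clip: TimeRange, pre_roll: float = 0.0, post_roll: float = 0.0) -> TimeRange:
    """Widen a clip's time range, never starting before time zero."""
    start, end = clip
    return (max(0.0, start - pre_roll), end + post_roll)


def join_clips(first: TimeRange, second: TimeRange) -> TimeRange:
    """Cover two clips and any inactivity between them with one range."""
    return (min(first[0], second[0]), max(first[1], second[1]))
```

For example, `add_roll((10.0, 20.0), pre_roll=3.0, post_roll=2.0)` widens the request to `(7.0, 22.0)`, and `join_clips((10.0, 20.0), (35.0, 50.0))` yields `(10.0, 50.0)`, which includes the quiet period between two animals.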

Earlier I mentioned other people having access to this data besides the production team. A colleague working in object-based media research used the data about which species of bird were visible in a particular frame to produce a bird identification quiz using our StoryKit platform. Although he used clips that had already been made for Autumnwatch 2020, he could have requested custom clips in the same way as the Watches producers.

Conclusions and future plans

This post has described the successful migration of the automated analysis of wildlife cameras onto the Cloud-fit media platform. The service ran without interruptions during both Autumnwatch and Winterwatch, and the clips produced by the system included audio, which improved on the earlier version of the system.

The analysis team spent their time improving the workflow and user experience of their software, presenting data to the producers, which helped them make decisions more efficiently.

For future Watches, we would like to develop the media ingest tool to a full-blown service. We are also keen to deploy our media processing tool. This accesses the media store in the same manner as the media packaging tool. This would lead to a number of potential improvements. I'll discuss two:

First, multiple different kinds of analysis could be run at once. I have been circumspect throughout this post about what the analysis can achieve. I cannot say that the analysis detects "an interesting animal moving" because that is a very difficult task for a machine to perform. But there are different methods for detecting things like moving animals, and they go wrong in different ways - for example, see the picture above. By combining different methods and making decisions about which clips to produce based on what they all think, we may get much closer to a system that really does pick out all the times where there is "an interesting animal moving" and nothing else.
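A simple way to combine several methods is to let each one vote on a frame and require a minimum number of agreeing votes. The sketch below assumes each detector is a function returning `True` or `False` for a frame; the name `combined_decision` and the voting rule are illustrative choices, not a description of what either team plans to build.

```python
from typing import Callable, Sequence


def combined_decision(
    frame: object,
    detectors: Sequence[Callable[[object], bool]],
    min_votes: int,
) -> bool:
    """Label a frame interesting when at least `min_votes` detectors agree."""
    votes = sum(1 for detect in detectors if detect(frame))
    return votes >= min_votes
```

Requiring agreement from more than one detector trades some sensitivity for fewer false alarms, because methods that fail in different ways are unlikely to be fooled by the same frame.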

Second, tools could be made to change the audio and video. We might want to highlight something hard to see (such as darkening most of a video to emphasise a small creature) or remove unwanted sound like traffic noise or speech.

As a final note, this successful test gives my team confidence both in our innovative approach to cloud-based media processing and in our prototype services. We'd like to make our prototypes available to more productions to continue our research into the capabilities that best serve the BBC's needs in future.

- BBC R&D - Intelligent Video Production Tools

- BBC Winterwatch - Where birdwatching and artificial intelligence collide

- BBC World Service - Digital Planet: Springwatch machine learning systems

- BBC Springwatch | Autumnwatch | Winterwatch

- BBC R&D - Cloud-Fit Production Update: Ingesting Video 'as a Service'

- BBC R&D - Tooling Up: How to Build a Software-Defined Production Centre

- BBC R&D - Beyond Streams and Files - Storing Frames in the Cloud

- BBC R&D - IP Studio

- BBC R&D - IP Studio Update: Partners and Video Production in the Cloud

- BBC R&D - Running an IP Studio

This project is part of the Automated Production and Media Management section