Professional, live, multi-camera coverage isn’t practical for every event or venue at a large festival. For our research at the Edinburgh Fringe Festival 2015, we experimented with placing three unmanned, static, ultra high definition (UHD) cameras and two unmanned, static, high definition GoPro cameras around the circumference of the BBC venue. A lightweight video capture rig of this kind, delivering images to a cloud system, could allow a director to crop and cut between these shots in software, over the web, and produce good-quality coverage ‘nearly live’ at reduced cost.

Project from 2014 - present

What are we doing?

By moving from completely live to 'Nearly Live' production, we believe that live event coverage can be made more accessible to smaller crews with limited budgets, and that production can be simplified for novice users. We want to increase the quantity of coverage from large-scale events like the Edinburgh Festival, and thus allow technical and craft resources to be deployed much more widely.

We are focusing on the construction and testing of a new production workflow in which the traditional roles of director, editor, vision-mixer and camera operator are combined into a single role offering cost savings and efficiencies. The operator can perform camera cuts, reframe existing cameras and edit shot decisions to deliver an "almost live" broadcast. The production tool is inherently object-based because it preserves all original camera feeds and records all edit decisions. This permits us to create new types of multi-screen, multi-feed experiences for audiences.

We are also investigating cheaper technologies permitted by the shift from live to nearly live production. We want to understand whether technologies used for distribution and playback of media over the Internet can also be used at the authoring stage to streamline workflows and give producers an accurate sense of what the final experience will be.

We are conducting a number of user tests to evaluate the workflow, with a view to creating a second iteration of the system. We are also working to add nearly live production capabilities to the IP Studio platform. In addition, we are collaborating with Newcastle University to integrate aspects of the workflow into Bootlegger, a video crowd-sourcing system which they have developed. Bootlegger orchestrates the capture of live events according to a shoot template, telling participants when, where and what to capture using an app on their phone.

How does it work?

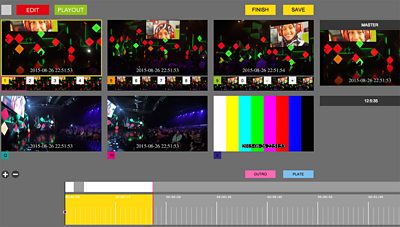

Our prototype Nearly Live production tool, codenamed Primer, presents the operator with a single-screen interface built as a web browser application. Like a clean switcher, it lets the operator select which camera is 'live' as the action unfolds, but it also features a broadcast preview.

A session server manages the application's state and allows multiple tools, each covering a different facet of production, to operate concurrently as part of a collaborative workflow.
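To make the idea of shared session state concrete, here is a minimal in-memory sketch. The `Session` and `EditDecision` names, the rule that a new decision replaces any existing one at the same timestamp, and the callback-based notification are all assumptions for illustration, not the design of Primer's session server; a real server would push updates to browser tools over the network rather than call local functions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class EditDecision:
    time: float      # position on the session timeline, in seconds
    camera_id: str   # physical or virtual camera selected from `time` onwards


@dataclass
class Session:
    """Hypothetical shared session state observed by several production tools."""
    decisions: List[EditDecision] = field(default_factory=list)
    _listeners: Dict[str, Callable[[List[EditDecision]], None]] = field(
        default_factory=dict, repr=False)
    version: int = 0

    def subscribe(self, tool_name: str,
                  callback: Callable[[List[EditDecision]], None]) -> None:
        self._listeners[tool_name] = callback
        callback(list(self.decisions))  # give the new tool an initial snapshot

    def apply(self, decision: EditDecision) -> None:
        # Replace any decision at the same timestamp, then keep the list ordered by time.
        self.decisions = [d for d in self.decisions if d.time != decision.time]
        self.decisions.append(decision)
        self.decisions.sort(key=lambda d: d.time)
        self.version += 1
        # Notify every tool with a copy so no tool can mutate shared state directly.
        for callback in self._listeners.values():
            callback(list(self.decisions))
```

Each subscribed tool receives a fresh snapshot after every change, so a cutting tool and, say, a metadata tool always see the same ordered list of decisions.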

A customised version of the Platform Live Tool is used for each of the static UHD cameras. Low-resolution video representations are generated from those feeds and rendered in the web application to keep bandwidth and rendering load manageable. The operator can create, and quickly switch between, reframed presets for each UHD camera feed; these appear as addressable virtual cameras in the user interface.
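The sketch below shows one way a reframed preset could map to a crop of the UHD frame. It assumes a 3840×2160 source and describes each hypothetical `VirtualCamera` by a normalised centre point and a zoom factor; neither the parameterisation nor the names come from the Platform Live Tool.

```python
from dataclasses import dataclass
from typing import Tuple

UHD_WIDTH, UHD_HEIGHT = 3840, 2160  # assumed source resolution


@dataclass(frozen=True)
class VirtualCamera:
    """Hypothetical reframed preset on one static UHD feed."""
    source_id: str
    centre_x: float  # normalised 0..1 across the source width
    centre_y: float  # normalised 0..1 across the source height
    zoom: float      # 1.0 = full frame, 2.0 = half the width and height

    def crop_rect(self, width: int = UHD_WIDTH,
                  height: int = UHD_HEIGHT) -> Tuple[int, int, int, int]:
        """Return (x, y, w, h) in source pixels, at the source aspect ratio."""
        if self.zoom < 1.0:
            raise ValueError("zoom must be >= 1.0; a crop cannot be wider than the source")
        w = round(width / self.zoom)
        h = round(height / self.zoom)
        # Centre the crop on the preset's point of interest...
        x = round(self.centre_x * width - w / 2)
        y = round(self.centre_y * height - h / 2)
        # ...then clamp it so it never extends past the frame edges.
        x = min(max(x, 0), width - w)
        y = min(max(y, 0), height - h)
        return x, y, w, h
```

At a zoom of 2.0 the crop is exactly 1920×1080, so an HD virtual camera can be cut from a UHD feed without upscaling.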

The user can pause the action at any point and seek back through the session to fine-tune edit decisions, using a visual representation of the programme timeline. On resuming, the play head seeks forward to the end of the timeline. This is possible because the time-shift window (DVR window) of each camera feed is infinite: all live footage is recorded and can be randomly accessed by seeking.
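A minimal model of the edit play head, assuming the recording is retained indefinitely so any point up to the live edge can be sought. `EditPlayhead` and its methods are hypothetical, and we read "the end of the timeline" as the furthest point the operator had reached before pausing; that reading is an assumption. The clock is injected so the behaviour can be tested without waiting in real time.

```python
from typing import Callable, Optional


class EditPlayhead:
    """Hypothetical edit play head over an infinite DVR window."""

    def __init__(self, clock: Callable[[], float]):
        self._clock = clock                       # returns wall-clock time in seconds
        self._start = clock()                     # when capture began
        self._anchor_pos = 0.0                    # timeline position at _anchor_time
        self._anchor_time = self._start
        self._paused_at: Optional[float] = None   # position while paused
        self._timeline_end = 0.0                  # furthest point reached before a pause

    def live_edge(self) -> float:
        # Everything captured so far is kept, so this is the seekable limit.
        return self._clock() - self._start

    def position(self) -> float:
        if self._paused_at is not None:
            return self._paused_at
        # While playing, the play head advances at real-time speed.
        return self._anchor_pos + (self._clock() - self._anchor_time)

    def pause(self) -> None:
        if self._paused_at is None:
            pos = self.position()
            self._timeline_end = max(self._timeline_end, pos)
            self._paused_at = pos

    def seek(self, t: float) -> None:
        if self._paused_at is None:
            raise RuntimeError("pause before seeking")
        # Any recorded moment can be revisited; only the future is off-limits.
        self._paused_at = min(max(t, 0.0), self.live_edge())

    def resume(self) -> None:
        if self._paused_at is None:
            return
        # Jump forward to the end of the edited timeline, then play on at 1x.
        self._anchor_pos = max(self._timeline_end, self._paused_at)
        self._anchor_time = self._clock()
        self._paused_at = None
```

After a resume, the edit play head trails the live edge by the total time spent paused, which is what eats into the near-live window.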

Once the operator has built up a sufficient buffer of edit decisions, they can begin broadcasting them. A second play head begins advancing along the timeline, transmitting each shot change to a server for baking into a broadcast stream. The baking process can either generate an MPEG-2 transport stream or broadcast an object-based description of the curated programme for compositing directly on the client playback device. The latter also requires MPEG-DASH segments to be incrementally uploaded to a CDN origin server.
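The broadcast play head can be pictured as a cursor that, on each tick, sends every shot change it has passed since the previous tick. In this sketch the decisions are `(time, camera)` tuples sorted by time, `send` stands in for the transmission to the baking server, and the JSON message shape is a hypothetical object-based description, not a published format.

```python
import json
from bisect import bisect_right
from typing import Callable, List, Tuple

Decision = Tuple[float, str]  # (timeline position in seconds, camera id)


class BroadcastPlayhead:
    """Hypothetical broadcast play head that trails the edit play head."""

    def __init__(self, send: Callable[[str], None]):
        self._send = send               # stands in for transmission to the baking server
        self.position = float("-inf")   # nothing broadcast yet

    def advance_to(self, t: float, decisions: List[Decision]) -> None:
        """Send every shot change in (previous position, t], then move to t.

        `decisions` must be sorted by time. `t` should never pass the edit
        play head, because decisions beyond it may still change.
        """
        times = [time for time, _ in decisions]
        first = bisect_right(times, self.position)
        last = bisect_right(times, t)
        for time, camera in decisions[first:last]:
            # Hypothetical object-based description of a single cut.
            self._send(json.dumps({"type": "cut", "time": time, "camera": camera}))
        self.position = t
```

Using a half-open interval means a cut that lands exactly on a tick boundary is sent once, never twice.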

A research goal is to investigate how big the window of time should be between the broadcast play head and edit play head to ensure the operator has enough time to perform edits without feeling rushed and whilst keeping the programme as close to a live broadcast as possible. We call this the ‘near-live window’.

A consequence of pausing the action and making edit decisions whilst broadcasting is that the near-live window gets shorter. Eventually, the broadcast play head will catch up with the edit play head, leaving the operator no time to edit any more camera decisions. At this point, the functionality degenerates into a simple clean switcher. The ideal window size is still under investigation and will largely depend on context; in particular, it will be dictated by the requirements of the production itself and by how quickly the live broadcast needs to reach audiences.
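The shrinking of the near-live window can be modelled with simple arithmetic, under the assumption that the broadcast play head always advances at real-time speed and the edit play head advances at real-time speed except while the operator has paused. The function names and the interval representation are illustrative only.

```python
from typing import List, Tuple

Interval = Tuple[float, bool]  # (duration in seconds, edit play head paused?)


def simulate_near_live_window(initial_window: float,
                              intervals: List[Interval]) -> List[float]:
    """Return the near-live window (edit head minus broadcast head) after each interval."""
    window = initial_window
    history = []
    for duration, edit_paused in intervals:
        if edit_paused:
            # The broadcast head keeps moving at 1x while the edit head stands
            # still, so the gap closes second for second.
            window -= duration
        # When both heads move at 1x the gap is unchanged.
        # The broadcast head cannot overtake the edit head, so clamp at zero.
        window = max(window, 0.0)
        history.append(window)
    return history


def operating_mode(window: float) -> str:
    # With no window left, every cut goes out as soon as it is made.
    return "clean switcher" if window == 0.0 else "nearly live"
```

Starting with a 30-second window, 12 seconds of pausing leaves 18 seconds; a further 25-second pause exhausts it, and the tool then behaves as a plain clean switcher.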

A graph of audio amplitude is attached to the timeline to help the operator identify events in the recordings. The master audio track is taken from a single camera or mixing desk and is used as the authoritative time source.
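The amplitude graph can be produced by reducing the master audio to one peak value per fixed-length bin. This sketch assumes mono floating-point samples in [-1.0, 1.0]; `amplitude_envelope` and its `bins_per_second` display parameter are hypothetical. Because the master audio is the authoritative time source, bin `i` starts at sample `i * bin_size`, that is, `i * bin_size / sample_rate` seconds into the timeline.

```python
from typing import List, Sequence


def amplitude_envelope(samples: Sequence[float], sample_rate: int,
                       bins_per_second: int = 10) -> List[float]:
    """Peak absolute amplitude of each fixed-length bin of the master audio.

    `bins_per_second` sets the horizontal resolution of the waveform drawn
    under the timeline; the last bin may be shorter than the others.
    """
    bin_size = max(sample_rate // bins_per_second, 1)
    return [
        max(abs(s) for s in samples[i:i + bin_size])
        for i in range(0, len(samples), bin_size)
    ]
```

Peaks rather than averages are used here so that short, loud events such as applause or a slammed door stay visible when the timeline is zoomed out.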

Latency and synchronisation are two big technical challenges for live IP production. Nearly live production sidesteps these issues by allowing an arbitrary delay between capturing, editing and broadcasting the content. This delay can be chosen to trade off edit window size against broadcast latency.

We can't yet guarantee frame-accurate synchronisation of multiple camera feeds using current web browser media engines, although we can get very close. We are embracing a "worse is better" philosophy here because of the huge benefit of increasing large-scale event coverage. In practice, the lack of synchronisation isn't a distraction to the nearly live operators and lip-sync is preserved.
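To make "frame-accurate" concrete: if each feed's playback position is sampled at the same moment as the master clock, the offset in frames is the time difference multiplied by the frame rate and rounded. This sketch assumes 25 fps and positions in seconds (the unit a browser media element's `currentTime` reports); `frame_offsets` and `is_frame_accurate` are hypothetical helpers, not part of any browser API.

```python
from typing import Dict


def frame_offsets(master_time: float, feed_times: Dict[str, float],
                  fps: float = 25.0) -> Dict[str, int]:
    """Signed offset of each feed from the master clock, in whole frames.

    Positive means the feed is ahead of the master audio.
    """
    return {feed: round((t - master_time) * fps) for feed, t in feed_times.items()}


def is_frame_accurate(offsets: Dict[str, int]) -> bool:
    # Frame-accurate means every feed shows the same frame as the master.
    return all(offset == 0 for offset in offsets.values())
```

At 25 fps one frame lasts 40 ms, so a feed whose offset rounds to zero is within half a frame (20 ms) of the master.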

Why does it matter?

It is difficult to give large, multi-site events like the Edinburgh Festival the comprehensive coverage they deserve, because traditional production workflows, which require large production crews, don't scale to hundreds of venues and thousands of performances. There are hundreds of Fringe venues and street performers that can't be covered by the BBC, so the full festival experience is only accessible to people who can attend in person, and a large number of performers are not represented in the BBC's coverage.

Outcomes

Our goal is to provide an open source SaaS (Software as a Service) that can be hosted privately or in the cloud. This will include session management, authentication and production/broadcast tooling built on the web technology stack. By making this work publicly available, we aim to build a community of practice around nearly live event coverage, to allow communities, groups and individuals to become broadcasters themselves, and to encourage them to contribute to its continued development.

The technology will also support Bootlegger, Newcastle University's crowd-sourced video production project.

Next steps

BBC R&D is going back to the Edinburgh Festivals in 2016 to trial several new technologies for near-live production that have been developed through a collaboration between our UX and APMM teams. These include an enhanced version of IP Studio supporting session management, live DASH feed generation, edit timeline capture and real-time composition.

We will also be testing a miniaturised IP Studio instance with the aim of running productions from different-sized venues at Edinburgh. This is part of an experiment to investigate the trade-offs in quality and latency that result from limited Internet connectivity and less-than-ideal production conditions. In particular, we are investigating new workflows for rapid production setup and teardown.

In readiness for the summer, we’ll also be rolling some improvements into the Primer UI as a result of feedback from our user tests in January. Primer is just our first prototype tool for nearly-live production, and we’ll be planning additional, complementary near-live tools for other activities in the near-live window, such as audio editing and meta-data entry. We’ll also be looking at collaborative tooling technologies to see if they can be applied to distributed, collaborative timeline editing.

Related links

- BBC R&D - High Speed Networking: Open Sourcing our Kernel Bypass Work

- BBC R&D - Beyond Streams and Files - Storing Frames in the Cloud

- BBC R&D - IP Studio

- BBC R&D - IP Studio: Lightweight Live

- BBC R&D - IP Studio: 2017 in Review - 2016 in Review

- BBC R&D - IP Studio Update: Partners and Video Production in the Cloud

- BBC R&D - Running an IP Studio

- BBC R&D - Building a Live Television Video Mixing Application for the Browser

- BBC R&D - Nearly Live Production

- BBC R&D - Discovery and Registration in IP Studio

- BBC R&D - Media Synchronisation in the IP Studio

- BBC R&D - Industry Workshop on Professional Networked Media

- BBC R&D - The IP Studio

- BBC R&D - IP Studio at the UK Network Operators Forum

- BBC R&D - Covering the Glasgow 2014 Commonwealth Games using IP Studio

- BBC R&D - Investigating the IP future for BBC Northern Ireland