Creating immersive and interactive audio experiences for a large number of listeners through a set of connected and synchronised devices.

Project from 2018 - 2022

What we're doing

Device orchestration is the concept of using a set of connected and synchronised devices for the reproduction of a media experience. BBC Research & Development has been investigating this in different ways for a while (for example, looking at second screen applications). But more recently, the Audio Team has seen how we can use this technology to deliver immersive and interactive audio experiences.

Using everyday devices for sound reproduction offers the potential for unlocking immersive experiences for a large number of listeners, but comes with significant challenges. The available devices might well all be very different - for example, a TV, a smart speaker, and a mobile phone. An orchestrated audio system needs to enable devices to connect to each other, play back sound in sync, and select which parts of the audio scene to play back.

In 2018, we demonstrated this technology with a short audio drama, The Vostok-K Incident. Listeners could connect their phones, tablets, and laptops to hear immersive sound and unlock extra content. Since then, we've been working on understanding more about how this technology might be used in BBC productions and building production tools that make it easy to create orchestrated experiences.

Why it matters

In everyday life, we can hear sounds coming from all around us - spatial hearing is one of the ways we make sense of the world. So adding spatial audio to media experiences is a great way to make them more immersive and engaging.

Historically, immersive audio technologies have required a set of identical loudspeakers in carefully controlled positions (or the use of headphones - see our work on binaural audio). This is often difficult to achieve in real home settings, so making use of everyday devices that listeners already have access to has great potential for bringing immersive experiences to a wider audience.

Our goals

Our long-term vision is that content producers inside and outside the BBC can easily take advantage of orchestrated audio reproduction. We've got a way to go before that though, and in the medium term, we have three objectives.

- Investigate the value of and use cases for audio device orchestration. We've released a trial audio drama, which went down well - the next step is to explore in more depth the potential uses for audio orchestration technology. We ran co-creation workshops with young audience members and production teams, and we're working on various new trial productions.

- Enable wide-scale prototyping of orchestrated experiences. The Vostok-K Incident took a team of people much longer to make than a standard radio drama. To experiment with different types of content, we needed to come up with a way of rapidly prototyping ideas. We've developed a production tool called Audio Orchestrator that is available to the community for prototyping orchestrated audio experiences. There's much more information about how it works in the .

-

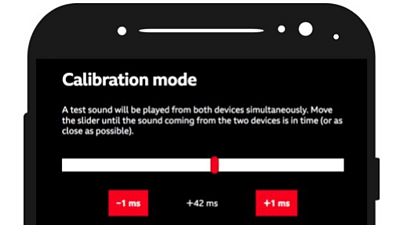

Research applications and technical elements of audio orchestration. Alongside working on trial productions and tools, we also continue to research the technical elements and creative practice of audio device orchestration, particularly in collaboration with our academic partners. Recent projects include investigations into using orchestration technology for reproducing five-channel content and the user experience of different systems for manually calibrating devices. We鈥檙e also involved in PhD projects on automatically routing objects to connected devices and exploring the creative opportunities of device orchestration.

How it works

There are three key components of an orchestrated audio system.

- Pairing. The system needs to know about the available devices and how to send audio to them. In our system, this is handled by sharing a pairing code - a short numeric code that can be entered on a device to link it into a session. The pairing code can be embedded into a web link or shared as a QR code to make it as easy as possible to connect a device into a session.

- Synchronisation. The devices that are connected to a session need to be able to play the right audio at the right time. This is achieved by linking all of the devices to a synchronisation server - basically, they all agree on what time it is, so they can play back sounds when they're told. We use the developed by our colleagues in the project.

- Audio playback. Once devices are connected and synchronised, they need to be able to play back some sound - and not necessarily the same sound on all of the devices. Object-based audio is a way of transmitting all of the different sounds that make up an experience separately so that they can be arranged in the best possible way. So if there's only one device, all sounds are played on that device. But if there are many devices, the different sounds can be spread out. We've developed a system that lets the producer determine how sounds are distributed to the devices, taking into account the number and types of connected devices as well as user interactions (for example, asking the user to report the position of the device).
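To make the three components a little more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the code used in our system: the six-digit code length, the `join` link parameter, the single round-trip offset estimate, and the `"main"` / `"spread"` rules are all hypothetical names and simplifications chosen for this example.

```python
import secrets
from typing import Dict, List
from urllib.parse import urlencode


def make_pairing_code(digits: int = 6) -> str:
    """Pairing: a short numeric code (the length is an assumption)."""
    return "".join(secrets.choice("0123456789") for _ in range(digits))


def pairing_link(base_url: str, code: str) -> str:
    """Embed the code in a web link; the 'join' parameter name is made up."""
    return f"{base_url}?{urlencode({'join': code})}"


def estimate_clock_offset(t_send: float, t_server: float, t_receive: float) -> float:
    """Synchronisation: estimate (server clock - local clock) from one
    request/response exchange, assuming equal delay in both directions."""
    return t_server - (t_send + t_receive) / 2.0


def local_start_time(server_start: float, offset: float) -> float:
    """Convert a start time on the shared timeline to the local clock."""
    return server_start - offset


def allocate_objects(objects: Dict[str, str], devices: List[str]) -> Dict[str, List[str]]:
    """Audio playback: decide which device plays which audio object.

    `objects` maps an object name to a hypothetical rule: "main" keeps the
    object on the first device, "spread" shares it out over the others.
    With a single device, everything plays on that device.
    """
    if not devices:
        raise ValueError("at least one device is required")
    main, aux = devices[0], devices[1:]
    allocation: Dict[str, List[str]] = {d: [] for d in devices}
    next_aux = 0
    for name, rule in objects.items():
        if rule == "spread" and aux:
            # Round-robin over the auxiliary devices.
            allocation[aux[next_aux % len(aux)]].append(name)
            next_aux += 1
        else:
            allocation[main].append(name)
    return allocation


if __name__ == "__main__":
    scene = {"dialogue": "main", "rain": "spread", "radio": "spread"}
    print(pairing_link("https://example.com/session", make_pairing_code()))
    print(allocate_objects(scene, ["tv"]))
    print(allocate_objects(scene, ["tv", "phone", "tablet"]))
```

A real system would repeat the clock exchange many times and filter the results, and a producer would typically want richer allocation rules (device type, reported position) than the two shown here.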

Outcomes

We've released our production software, Audio Orchestrator, on the BBC MakerBox platform. As well as requesting access to the tool, you can sign up to the to engage with the community and hear about any updates. You can also read the .

We released The Vostok-K Incident trial production in 2018, and currently have a couple of new trials in production. BBC Taster is the place to check for new experiences.

We've published a large body of research around device orchestration at various academic conferences and in journals. See the links below to read more.

- BBC R&D - How we made the Audio Orchestrator - and how you can use it too

- BBC MakerBox - Audio Orchestrator

- BBC R&D - Monster: A Hallowe'en horror played out through your audio devices

- BBC R&D - Conduct your own orchestra? Pick A Part with the BBC Philharmonic!

- BBC R&D - Vostok K Incident - Immersive Spatial Sound Using Personal Audio Devices

- BBC R&D - Vostok-K Incident: Immersive Audio Drama on Personal Devices

- BBC R&D - Evaluation of an immersive audio experience

- BBC R&D - Exploring audio device orchestration with audio professionals

- BBC R&D - Framework for web delivery of immersive audio experiences using device orchestration

- BBC R&D - Render Engine Broadcasting

- BBC R&D - Living Room of the Future