We've made an experimental new prototype to help people watch and listen to BBC programmes together even when they are physically apart. It's designed for small groups to watch programmes in close synchronisation, so that you can chat by text, audio or video while watching. We hope people will come together around their favourite programmes, even if they are stuck in different locations because of the current coronavirus lockdown.

Try BBC Together with your friends over at BBC Taster

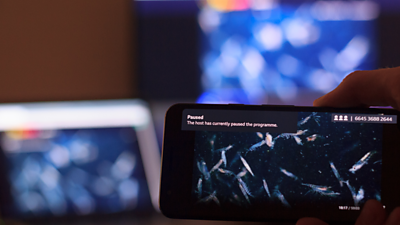

Running a session is as simple as visiting the BBC Together web page, pasting a link to a BBC iPlayer programme, and getting a unique link to send to your friends. Once everyone is in, you can pause or queue up another programme for everybody at any time.

The system is built on top of the BBC's Standard Media Player and works with almost any BBC audio or video, so it doesn't need any additional software to be installed. Our lookup server parses the pasted link, which can point to any BBC website, and talks to the relevant BBC system to find out how to play the video or audio content on that page. For now, this works with most BBC iPlayer, Sounds, Bitesize, News, and Sport pages - but let us know if you find any links that don't work as expected!

BBC Research & Development has a rich track record of experimenting with object-based, multi-device synchronised experiences that increase immersiveness, interactivity and personalisation. We saw an opportunity to use our expertise in this area to create experiences that could bring people together and engage them with BBC programmes. The BBC Together prototype tests our previous research work, but at a larger scale. It allows us to collect valuable audience feedback on these new communal experiences and to combine our knowledge, building upon earlier tests and trials. For example:

- We trialled object-based, multi-screen experiences, showing statistics, infographics or alternative camera angles on additional devices as part of our involvement in .

- We used the same synchronisation system for the spatial audio trial The Vostok-K Incident, where multiple devices in the same room can play different parts of an audio drama scene.

- BBC R&D has previously looked at synchronising IP-delivered additional content such as audio description or director's commentary to a main video stream.

We wanted to use our existing research to make something that would help people during the current pandemic. We've worked across three teams in R&D and with colleagues in iPlayer and Sounds to make this prototype and release it for testing. Successfully integrating our technology with the BBC Standard Media Player means we will also be able to carry out synchronised media trials more widely in future.

How it works

A key component of our prototype is a synchronisation service that enables devices across the internet to very accurately synchronise their playback. The service was initially developed and open-sourced as part of :

It works by first getting every participant to synchronise to the server clock ("wall clock" time), and then publishing and subscribing to content timelines derived from this shared understanding of time. The participant devices then report changes in their published timelines via timestamp-containing updates. The server compares timeline updates from all participants that published similar timelines, determines how to reconcile any differences, and sends the result on to the other devices in the session (the timeline subscribers). In lab conditions, this approach can synchronise media to within about 20ms, close enough to show the same frame of video on all devices at the same time.
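The two ideas in this description, agreeing on a shared clock and then describing playback as timestamped points on a timeline, can be illustrated with a minimal Python sketch. This is not the code of the synchronisation service: the function and class names are hypothetical, and the clock offset is estimated with a simple request/response exchange that assumes the network delay is the same in both directions.

```python
from dataclasses import dataclass


def estimate_clock_offset(t_send: float, t_server: float, t_recv: float) -> float:
    """Estimate (server clock - local clock) from one request/response exchange.

    t_send and t_recv are local clock readings; t_server is the server's reading.
    Assumption: the delay is symmetric, so the server reading was taken halfway
    through the round trip.
    """
    round_trip = t_recv - t_send
    server_time_at_recv = t_server + round_trip / 2.0
    return server_time_at_recv - t_recv


@dataclass(frozen=True)
class TimelineUpdate:
    """A timestamped statement: at wall_clock_time the content was at content_time."""
    wall_clock_time: float  # seconds on the shared (server) clock
    content_time: float     # seconds into the programme
    speed: float            # 1.0 = playing at normal speed, 0.0 = paused

    def content_time_at(self, wall_clock_now: float) -> float:
        """Extrapolate the expected content position at a later wall-clock time."""
        elapsed = wall_clock_now - self.wall_clock_time
        return self.content_time + elapsed * self.speed
```

A subscriber would add the estimated offset to its local clock reading to get the current wall-clock time, then call `content_time_at` on the latest update to find where its playhead ought to be.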

This is well below the threshold of asynchrony that users in different locations can perceive. For this trial, we expect our prototype to perform much better than users all trying to hit the play button at the same time. In fact, synchronised playback through BBC Together should be close enough to let you have a video or voice call while watching and react together to the big reveals in a drama or the punchlines in a comedy. We're interested in testing how well our prototype scales, whether it makes for a good experience, and whether this very close synchronisation matters.

On the participant's device, our plugin for the media player runs an adaptation algorithm that compares the current playhead position to the ideal described by the content timeline. It then generates commands to the player - to play, pause, seek, speed up or slow down - to stay in sync with minimal interruption to the end-user.
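As a rough illustration of this kind of decision logic, here is a minimal Python sketch. It is not the plugin's actual algorithm: the function name, the command names and all three thresholds (`tolerance`, `seek_threshold`, `max_rate_change`) are assumptions chosen for the example.

```python
from typing import Tuple


def choose_action(actual: float, ideal: float, playing: bool, should_play: bool,
                  tolerance: float = 0.02, seek_threshold: float = 1.0,
                  max_rate_change: float = 0.05) -> Tuple[str, float]:
    """Pick one player command that moves the playhead towards the ideal position.

    actual and ideal are content positions in seconds. Returns (command, value):
    value is a position for play, pause and seek, and a playback rate for set_rate.
    All thresholds are hypothetical defaults, not values from the prototype.
    """
    # First make the play/pause state match the shared timeline.
    if should_play and not playing:
        return ("play", ideal)
    if playing and not should_play:
        return ("pause", ideal)

    error = ideal - actual  # positive means this device is behind
    if abs(error) <= tolerance:
        return ("set_rate", 1.0)   # close enough: play at normal speed
    if abs(error) > seek_threshold:
        return ("seek", ideal)     # too far out: jump straight there
    # Small error: nudge the playback rate instead of interrupting playback.
    rate = 1.0 + max_rate_change if error > 0 else 1.0 - max_rate_change
    return ("set_rate", rate)
```

With these example thresholds, a device half a second behind would be sped up slightly, while one ten seconds behind would be told to seek.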

A particular challenge for the team was designing an acceptable adaptation algorithm to work with DASH playback, where the whole video is not downloaded in advance; every seek command may trigger playback delays as the DASH player buffers the video segments not available in its local cache. We attempted to solve this by minimising the number of big jumps, using small speed adjustments instead where feasible, and by estimating how long the buffering will take. However, this is still not perfect in all situations and may need further research work.
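One way to picture the trade-off is the Python sketch below. It is an illustration under stated assumptions rather than the team's method: the buffering estimate is a simple exponential moving average of past seek delays, and the class, function names and default values are all hypothetical.

```python
class BufferingEstimator:
    """Running estimate of how long the player stalls after a seek (seconds).

    Assumption: an exponential moving average of observed delays is used here
    purely for illustration.
    """

    def __init__(self, initial_estimate: float = 1.0, smoothing: float = 0.25):
        self.estimate = initial_estimate
        self.smoothing = smoothing

    def record(self, observed_delay: float) -> None:
        """Blend a newly observed buffering delay into the running estimate."""
        self.estimate += self.smoothing * (observed_delay - self.estimate)


def seek_target(ideal_now: float, speed: float, estimator: BufferingEstimator) -> float:
    """Aim ahead of the ideal position so playback resumes in sync after buffering."""
    return ideal_now + speed * estimator.estimate


def catch_up_time(error: float, max_rate_change: float) -> float:
    """Seconds needed to absorb a position error using only a rate adjustment."""
    return abs(error) / max_rate_change
```

For example, absorbing a 0.5 second error with a 5% speed change takes about 10 seconds of playback, which may well be preferable to a seek that stalls while new segments download. For much larger errors the rate adjustment would take too long, and a seek aimed at `seek_target` becomes the better option.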

What next?

It is hard to replicate real working conditions, such as load distribution, in our experimental environments. We don't yet know how well the various components will scale, and releasing the service in its experimental form is one way we can test this.

We also don't really know if there is an appetite for synchronised audio and video in this form. We think that there might be - there are many "experience together" applications launching, hinting at new, sociable ways to consume content. But we don't know who this might be most useful for, nor which kinds of BBC programmes people want to watch in this way.

It's ready to try out on BBC Taster now, and you can rate it and share it with friends there. We're looking forward to hearing what you think.

- BBC Taster - Try The Vostok-K Incident

- BBC R&D - Vostok-K Incident: Immersive Audio Drama on Personal Devices

- BBC R&D - Immersive Spatial Sound Using Personal Audio Devices

- BBC R&D - Synchronisation to create a Personalised Broadcast

- BBC R&D - Seamless Substitution - Mixing IP and Broadcast Video for Personalised TV

Immersive and Interactive Content section

The IIC section is a group of around 25 researchers investigating ways of capturing and creating new kinds of audio-visual content, with a particular focus on immersion and interactivity.

Internet Research and Future Services section

The Internet Research and Future Services section is an interdisciplinary team of researchers, technologists, designers, and data scientists who carry out original research to solve problems for the BBC. Our work focuses on the intersection of audience needs and public service values, with digital media and machine learning. We develop research insights, prototypes and systems using experimental approaches and emerging technologies.

Future Experience Technologies section

This project is part of the Future Experience Technologies section