A few months ago I was really happy to announce the open source release of an experimental video library called the HTML5-Video-Compositor, which allows you to edit and composite video dynamically in JavaScript. Today I'm even more excited to announce the release of a new and improved library called the VideoContext. The VideoContext takes inspiration from the WebAudio API, allowing you to build complex and dynamic video processing pipelines and sequence media playback, all with a straightforward and familiar API.

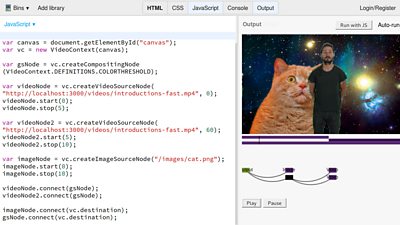

We built this library taking inspiration from the design of the WebAudio API while also incorporating some higher-level convenience functions. We hope this makes the library feel familiar to anyone picking it up for the first time who has previous experience in the web audio world. The VideoContext uses a graph-based rendering pipeline, with video sources, effects and processing blocks represented as software objects which can be connected, disconnected, created and removed in real time during playback.
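To make the graph idea concrete, here is a minimal sketch in plain JavaScript. It is a toy model, not the VideoContext API: `ToyNode`, `connect`, `disconnect` and `render` are hypothetical names chosen for illustration, and strings stand in for video frames.

```javascript
// Toy model of a graph-based pipeline. Every name here is hypothetical
// and is NOT the VideoContext API; strings stand in for video frames.
class ToyNode {
  constructor(name, process) {
    this.name = name;
    this.process = process; // (upstreamOutputs, time) => output
    this.inputs = [];       // nodes feeding into this one
  }

  // Register this node as an input of `target`.
  connect(target) {
    if (!target.inputs.includes(this)) target.inputs.push(this);
    return target; // allows chaining: a.connect(b).connect(c)
  }

  // Remove this node from the inputs of `target`.
  disconnect(target) {
    target.inputs = target.inputs.filter((node) => node !== this);
  }

  // Pull a result through the graph for the given time.
  render(time) {
    const upstream = this.inputs.map((node) => node.render(time));
    return this.process(upstream, time);
  }
}

const source = new ToyNode("source", (_inputs, t) => `frame@${t}`);
const mono = new ToyNode("monochrome", ([frame]) => `mono(${frame})`);
const destination = new ToyNode("destination", (inputs) => inputs);

// source -> monochrome -> destination
source.connect(mono).connect(destination);
const withEffect = destination.render(0);

// Rewire while "playing": bypass the effect.
mono.disconnect(destination);
source.connect(destination);
const withoutEffect = destination.render(1);
```

Because each render walks whatever connections exist at that moment, rewiring between two frames takes effect on the next one, which is the property the paragraph above describes.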

Technology

The browser provides a number of individual technologies that can be used for creating interactive video content. The VideoContext brings together WebGL and a number of new DOM features provided by the HTML5 spec.

The core of the video processing is implemented as shaders written in GLSL. Built into the library is a range of common effects such as cross-fade, chroma keying, scale, flip, and crop. There's a straightforward JSON representation for effects that can be used to add your own custom ones. The library also provides a simple mechanism for mapping GLSL uniforms onto JavaScript object properties so they can be manipulated in real time in your JavaScript code.
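As a rough illustration of mapping uniforms onto properties, the sketch below declares an effect as a plain object and exposes each uniform as a property with a getter and setter. The field names (`title`, `fragmentShader`, `properties`) and the helper `mapUniforms` are assumptions made for this example and may not match the library's actual format; the `upload` callback stands in for the WebGL call (for example `gl.uniform1f`) that a real renderer would make.

```javascript
// Hypothetical effect description. The field names are illustrative and
// may not match the JSON format the library actually uses.
const brightnessEffect = {
  title: "Brightness",
  fragmentShader: `
    precision mediump float;
    uniform sampler2D u_image;
    uniform float brightness;
    varying vec2 v_texCoord;
    void main() {
      vec4 color = texture2D(u_image, v_texCoord);
      gl_FragColor = vec4(color.rgb * brightness, color.a);
    }`,
  properties: {
    brightness: { type: "uniform", value: 1.0 },
  },
};

// Expose every declared uniform as a plain property on `node`.
// Writes are forwarded to `upload(name, value)`; in a WebGL renderer
// that callback would set the uniform on the compiled shader program.
function mapUniforms(node, definition, upload) {
  const values = {};
  for (const [name, spec] of Object.entries(definition.properties)) {
    values[name] = spec.value;
    Object.defineProperty(node, name, {
      enumerable: true,
      get: () => values[name],
      set: (value) => {
        values[name] = value;
        upload(name, value);
      },
    });
    upload(name, spec.value); // push the default value once
  }
  return node;
}

// Record uploads in an array instead of talking to a GPU.
const uploads = [];
const effectNode = mapUniforms({}, brightnessEffect, (name, value) => {
  uploads.push([name, value]);
});

// Application code just assigns to a property.
effectNode.brightness = 0.5;
```

With this shape, animating an effect is an ordinary assignment inside whatever loop or event handler the application already has.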

This library takes the same pragmatic approach to timing and synchronization that the HTML5-Video-Compositor took. With no guarantee of synchronization between multiple HTML5 video elements, we do the best we can with what the browser provides. All the major timing functionality is handled in a requestAnimationFrame loop, and the underlying video elements have callbacks listening for stalled states, which cause the whole VideoContext to pause.
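The approach can be sketched as a clock that only advances while no source is stalled. This is an illustration under stated assumptions rather than the library's implementation: `PlaybackClock` and `drive` are hypothetical names, and the choice of media events used to detect and clear a stall (`stalled`, `waiting`, `playing`, `canplay`) is one plausible wiring.

```javascript
// Hypothetical playback clock; not the library's implementation.
class PlaybackClock {
  constructor() {
    this.currentTime = 0;      // seconds of timeline played so far
    this.playing = false;
    this.stalled = new Set();  // sources currently waiting for data
    this.lastTimestamp = null; // previous frame timestamp in ms
  }

  play() {
    this.playing = true;
    this.lastTimestamp = null; // avoid a jump after a long pause
  }

  pause() {
    this.playing = false;
  }

  markStalled(source) {
    this.stalled.add(source);
  }

  markReady(source) {
    this.stalled.delete(source);
  }

  // Call once per animation frame with the frame timestamp in ms.
  // Time only advances when playing and no source is stalled.
  tick(timestampMs) {
    const deltaSeconds =
      this.lastTimestamp === null ? 0 : (timestampMs - this.lastTimestamp) / 1000;
    this.lastTimestamp = timestampMs;
    if (this.playing && this.stalled.size === 0) {
      this.currentTime += deltaSeconds;
    }
    return this.currentTime;
  }
}

// Browser wiring (assumed, not called here): listen for stall-like media
// events on each video element and drive the clock from rAF.
function drive(clock, videos) {
  for (const video of videos) {
    video.addEventListener("stalled", () => clock.markStalled(video));
    video.addEventListener("waiting", () => clock.markStalled(video));
    video.addEventListener("playing", () => clock.markReady(video));
    video.addEventListener("canplay", () => clock.markReady(video));
  }
  const loop = (timestampMs) => {
    clock.tick(timestampMs);
    requestAnimationFrame(loop);
  };
  requestAnimationFrame(loop);
}

// Simulated frames, so the behaviour can be checked without a browser.
const clock = new PlaybackClock();
clock.play();
clock.tick(0);
const beforeStall = clock.tick(500);  // half a second has elapsed
clock.markStalled("videoA");
const duringStall = clock.tick(1000); // frozen while videoA buffers
clock.markReady("videoA");
const afterStall = clock.tick(1500);  // resumes from where it froze
```

Holding the whole timeline while any one source buffers trades smooth playback for keeping the sources roughly aligned, which matches the "best we can" stance described above.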

In practice these techniques make the VideoContext usable in environments with good network speeds (the actual performance will depend on processing graph complexity, network bandwidth, and video resolution).

Currently the library is tested and works on newer builds of both Chrome and Firefox on desktop, and works with some issues on Safari. Due to several factors the library isn't fully functional on any mobile platform. This is in part because a human interaction with a video element is required before it can be controlled programmatically.

Next Steps

We're using this library internally to provide client-side rendering for our work researching and developing a streamable description for media composition. We're also using it as the rendering engine for a number of demos and experiences we hope to share in the future. We're making this library available as open source to lower the barrier to entry for everyone who wants to create new kinds of content experience. We're excited to see what you will make.
